Leaderboard

Popular Content

Showing content with the highest reputation on 03/22/20 in all areas

-

Hi everyone, I am Squid's wife. I just wanted everyone to know he will be 50 on Sunday, March 22nd. If you could all wish him a happy birthday, that would be great. Due to COVID-19, no party. Thanks, Tracey

5 points

-

Just caught onto this today (thx @SpaceInvaderOne!) and saw we're "only" #2, which just won't do. I just "remembered" I have a Threadripper 2950X new in box. I was going to sell the old dual Xeon E5 V2s and upgrade, but now I'm going to bring it out and join the fray alongside the i9-9900 Hackintosh [AMD 580] and the Ryzen 3700X. The Threadripper will have to go "benchtop bare" for now, but that's OK. Should probably just use the office as a sauna now 🥵. I think the UPS is sweating a tad...

I am regional medical director for a company that does home medical visits for the sickest of the (US Medicare) population, i.e. the top-tier risk for COVID, with an average patient age of 80+. We have offices in all of the most affected US cities so far. We're working nonstop to keep our patients safe at home. We've had to retreat temporarily to mostly telephone visits due to a shortage of PPE (protective gear) until our supply improves, so we don't spread it to them, which is very frustrating. Now I can feel better about being stuck at home, still helping on the compute side until we can safely get back into their homes.

I wanted to thank everyone here for being so eager to take part and take action, and with such impressive results. It means a lot in the medical world to see folks being resourceful and doing their part. Please stay home, stay safe, and round up some more CPUs for this!

2 points

-

The ease of setting it up with Docker probably played a huge role in that. It's set-it-and-forget-it (quite literally: this morning I was wondering who was watching Plex at home around 5am, and then realised Plex and BOINC use the same cores 😅).

2 points

-

BOINC team coming in at #2 in the world!!! https://boinc.bakerlab.org/rosetta/top_teams.php

2 points

-

https://www.tomshardware.com/news/folding-at-home-worlds-top-supercomputers-coronavirus-covid-19

https://cointelegraph.com/news/foldinghome-surpasses-400-000-users-amid-crypto-contribution

2 points

-

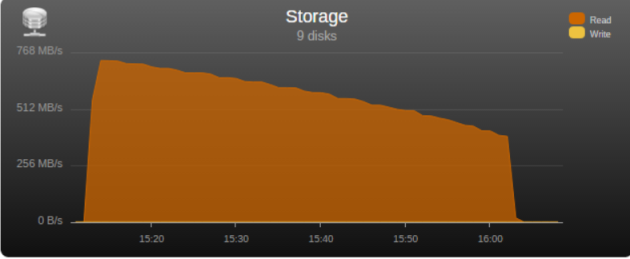

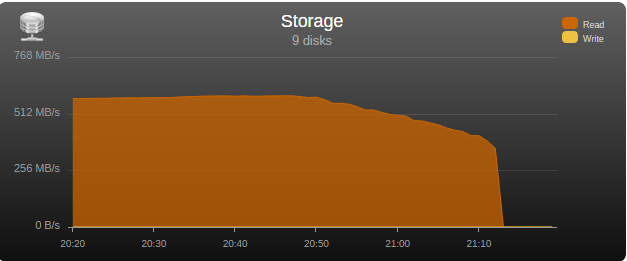

I had the opportunity to test the "real world" bandwidth of some controllers commonly used in the community, so I'm posting my results in the hope that they help some users choose a controller and others understand what may be limiting their parity check/sync speed. Note that these tests are only relevant for those operations; normal reads/writes to the array are usually limited by hard disk or network speed.

Next to each controller is its maximum theoretical throughput, followed by my results for the number of disks connected. Each result is the observed parity/read check speed using a fast SSD-only array with Unraid V6. Power figures (e.g., 2w) are the measured controller power consumption with all ports in use.

2 Port Controllers

- SIL 3132 PCIe gen1 x1 (250MB/s): 1 x 125MB/s, 2 x 80MB/s
- Asmedia ASM1061 PCIe gen2 x1 (500MB/s), e.g., SYBA SY-PEX40039 and other similar cards: 1 x 375MB/s, 2 x 206MB/s
- JMicron JMB582 PCIe gen3 x1 (985MB/s), e.g., SYBA SI-PEX40148 and other similar cards: 1 x 570MB/s, 2 x 450MB/s

4 Port Controllers

- SIL 3114 PCI (133MB/s): 1 x 105MB/s, 2 x 63.5MB/s, 3 x 42.5MB/s, 4 x 32MB/s
- Adaptec AAR-1430SA PCIe gen1 x4 (1000MB/s): 4 x 210MB/s
- Marvell 9215 PCIe gen2 x1 (500MB/s), 2w, e.g., SYBA SI-PEX40064 and other similar cards (possible issues with virtualization): 2 x 200MB/s, 3 x 140MB/s, 4 x 100MB/s
- Marvell 9230 PCIe gen2 x2 (1000MB/s), 2w, e.g., SYBA SI-PEX40057 and other similar cards (possible issues with virtualization): 2 x 375MB/s, 3 x 255MB/s, 4 x 204MB/s
- IBM H1110 PCIe gen2 x4 (2000MB/s), LSI 2004 chipset; results should be the same as for an LSI 9211-4i and other similar controllers: 2 x 570MB/s, 3 x 500MB/s, 4 x 375MB/s
- Asmedia ASM1064 PCIe gen3 x1 (985MB/s), e.g., SYBA SI-PEX40156 and other similar cards: 2 x 450MB/s, 3 x 300MB/s, 4 x 225MB/s
- Asmedia ASM1164 PCIe gen3 x2 (1970MB/s). Note: not actually tested; performance inferred from the ASM1166 with up to 4 devices: 2 x 565MB/s, 3 x 565MB/s, 4 x 445MB/s

5 and 6 Port Controllers

- JMicron JMB585 PCIe gen3 x2 (1970MB/s), 2w, e.g., SYBA SI-PEX40139 and other similar cards: 2 x 570MB/s, 3 x 565MB/s, 4 x 440MB/s, 5 x 350MB/s
- Asmedia ASM1166 PCIe gen3 x2 (1970MB/s), 2w: 2 x 565MB/s, 3 x 565MB/s, 4 x 445MB/s, 5 x 355MB/s, 6 x 300MB/s

8 Port Controllers

- Supermicro AOC-SAT2-MV8 PCI-X (1067MB/s): 4 x 220MB/s (167MB/s*), 5 x 177.5MB/s (135MB/s*), 6 x 147.5MB/s (115MB/s*), 7 x 127MB/s (97MB/s*), 8 x 112MB/s (84MB/s*)
  (*) PCI-X 100MHz slot (800MB/s)
- Supermicro AOC-SASLP-MV8 PCIe gen1 x4 (1000MB/s), 6w: 4 x 140MB/s, 5 x 117MB/s, 6 x 105MB/s, 7 x 90MB/s, 8 x 80MB/s
- Supermicro AOC-SAS2LP-MV8 PCIe gen2 x8 (4000MB/s), 6w: 4 x 340MB/s, 6 x 345MB/s, 8 x 320MB/s (205MB/s*, 200MB/s**)
  (*) PCIe gen2 x4 (2000MB/s); (**) PCIe gen1 x8 (2000MB/s)
- LSI 9211-8i PCIe gen2 x8 (4000MB/s), 6w, LSI 2008 chipset: 4 x 565MB/s, 6 x 465MB/s, 8 x 330MB/s (190MB/s*, 185MB/s**)
  (*) PCIe gen2 x4 (2000MB/s); (**) PCIe gen1 x8 (2000MB/s)
- LSI 9207-8i PCIe gen3 x8 (4800MB/s), 9w, LSI 2308 chipset: 8 x 565MB/s
- LSI 9300-8i PCIe gen3 x8 (4800MB/s with the SATA3 devices used for this test), LSI 3008 chipset: 8 x 565MB/s (425MB/s*, 380MB/s**)
  (*) PCIe gen3 x4 (3940MB/s); (**) PCIe gen2 x8 (4000MB/s)

SAS Expanders

HP 6Gb (3Gb SATA) SAS Expander, 11w

- Single Link with LSI 9211-8i (1200MB/s*): 8 x 137.5MB/s, 12 x 92.5MB/s, 16 x 70MB/s, 20 x 55MB/s, 24 x 47.5MB/s
- Dual Link with LSI 9211-8i (2400MB/s*): 12 x 182.5MB/s, 16 x 140MB/s, 20 x 110MB/s, 24 x 95MB/s
  (*) Half the 6Gb bandwidth, because it only links at 3Gb with SATA disks

Intel® SAS2 Expander RES2SV240, 10w

- Single Link with LSI 9211-8i (2400MB/s): 8 x 275MB/s, 12 x 185MB/s, 16 x 140MB/s (112MB/s*), 20 x 110MB/s (92MB/s*)
  (*) Avoid using disks with a slower link speed on expanders, as they bring the total speed down; in this example 4 of the SSDs were SATA2 instead of all SATA3.
- Dual Link with LSI 9211-8i (4000MB/s): 12 x 235MB/s, 16 x 185MB/s
- Dual Link with LSI 9207-8i (4800MB/s): 16 x 275MB/s

LSI SAS3 expander (included on a Supermicro BPN-SAS3-826EL1 backplane)

- Single Link with LSI 9300-8i (tested with SATA3 devices; max usable bandwidth would be 2200MB/s, but with LSI's Databolt technology we can get almost SAS3 speeds): 8 x 500MB/s, 12 x 340MB/s
- Dual Link with LSI 9300-8i (*): 10 x 510MB/s, 12 x 460MB/s
  (*) Tested with SATA3 devices; max usable bandwidth would be 4400MB/s, but with LSI's Databolt technology we can get closer to SAS3 speeds. With SAS3 devices the limit here would be the PCIe link, which should be around 6600-7000MB/s usable.

HP 12G SAS3 EXPANDER (761879-001)

- Single Link with LSI 9300-8i (2400MB/s*): 8 x 270MB/s, 12 x 180MB/s, 16 x 135MB/s, 20 x 110MB/s, 24 x 90MB/s
- Dual Link with LSI 9300-8i (4800MB/s*): 10 x 420MB/s, 12 x 360MB/s, 16 x 270MB/s, 20 x 220MB/s, 24 x 180MB/s
  (*) Tested with SATA3 devices, with no Databolt or equivalent technology (at least not with an LSI HBA). With SAS3 devices the limit here would be around 4400MB/s with a single link, and the PCIe slot with a dual link, which should be around 6600-7000MB/s usable.

Intel® SAS3 Expander RES3TV360

- Single Link with LSI 9308-8i (*): 8 x 490MB/s, 12 x 330MB/s, 16 x 245MB/s, 20 x 170MB/s, 24 x 130MB/s, 28 x 105MB/s
- Dual Link with LSI 9308-8i (*): 12 x 505MB/s, 16 x 380MB/s, 20 x 300MB/s, 24 x 230MB/s, 28 x 195MB/s
  (*) Tested with SATA3 devices; the PMC expander chip includes functionality similar to LSI's Databolt. With SAS3 devices the limit here would be around 4400MB/s with a single link, and the PCIe slot with a dual link, which should be around 6600-7000MB/s usable.

Note: these results were obtained after updating the expander firmware to the latest available at the time (B057); it was noticeably slower with the older firmware it came with.
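As a rough cross-check on the theoretical figures quoted for each card, the per-lane PCIe numbers follow from each generation's transfer rate and line encoding (2.5 and 5 GT/s with 8b/10b for gen1/gen2, 8 GT/s with 128b/130b for gen3). The Python sketch below reproduces them and then splits a link evenly across the attached disks. The even split and the function names are illustrative assumptions, not how any controller actually schedules I/O, so treat the result as a ceiling.

```python
# Sketch: theoretical PCIe link bandwidth and an even per-disk split.
# Assumption: every disk on the link gets an equal share and protocol
# overhead is ignored, so measured speeds will land below this ceiling.

# Usable MB/s per lane after line encoding, one direction.
PER_LANE_MBPS = {
    1: 2.5e3 * 8 / 10 / 8,     # gen1: 2.5 GT/s, 8b/10b    -> 250 MB/s
    2: 5.0e3 * 8 / 10 / 8,     # gen2: 5 GT/s, 8b/10b      -> 500 MB/s
    3: 8.0e3 * 128 / 130 / 8,  # gen3: 8 GT/s, 128b/130b   -> ~985 MB/s
}


def pcie_bandwidth(gen: int, lanes: int) -> int:
    """Theoretical MB/s for a link, rounding per lane before multiplying."""
    return round(PER_LANE_MBPS[gen]) * lanes


def per_disk_share(link_mbps: float, disks: int) -> float:
    """Best-case MB/s per disk if the link were split evenly."""
    return link_mbps / disks


if __name__ == "__main__":
    link = pcie_bandwidth(3, 1)           # e.g. an ASM1064 card: PCIe gen3 x1
    print(link, per_disk_share(link, 4))  # 985 246.25
```

For the ASM1064 with 4 disks the even split gives about 246MB/s per disk, against the measured 225MB/s above.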
SATA 2 vs SATA 3

I often see users on the forum asking whether changing to SATA 3 controllers or disks would improve their speed. SATA 2 has enough bandwidth (between 265 and 275MB/s according to my tests) for the fastest disks currently on the market. If you are buying a new board or controller you should buy SATA 3 for the future, but except for SSD use there's no gain in changing your SATA 2 setup to SATA 3.

Single vs. Dual Channel RAM

In arrays with many disks, and especially with low "horsepower" CPUs, memory bandwidth can also have a big effect on parity check speed. Obviously this will only make a difference if you're not hitting a controller bottleneck. Two examples with 24-drive arrays:

- Asus A88X-M PLUS with AMD A4-6300 dual core @ 3.7GHz: Single Channel 99.1MB/s, Dual Channel 132.9MB/s
- Supermicro X9SCL-F with Intel G1620 dual core @ 2.7GHz: Single Channel 131.8MB/s, Dual Channel 184.0MB/s

DMI

There is another bus that can be a bottleneck on Intel-based boards, much more so than SATA 2: the DMI that connects the south bridge or PCH to the CPU. Sockets 775, 1156 and 1366 use DMI 1.0; sockets 1155, 1150 and 2011 use DMI 2.0; socket 1151 uses DMI 3.0.

- DMI 1.0 (1000MB/s): 4 x 180MB/s, 5 x 140MB/s, 6 x 120MB/s, 8 x 100MB/s, 10 x 85MB/s
- DMI 2.0 (2000MB/s): 4 x 270MB/s (SATA 2 limit), 6 x 240MB/s, 8 x 195MB/s, 9 x 170MB/s, 10 x 145MB/s, 12 x 115MB/s, 14 x 110MB/s
- DMI 3.0 (3940MB/s): 6 x 330MB/s (onboard SATA only*), 10 x 297.5MB/s, 12 x 250MB/s, 16 x 185MB/s
  (*) Despite being DMI 3.0 (**), Skylake, Kaby Lake, Coffee Lake, Comet Lake and Alder Lake chipsets have a maximum combined bandwidth of approximately 2GB/s for the onboard SATA ports.
  (**) Except the low-end H110 and H310 chipsets, which are only DMI 2.0. Z690 is DMI 4.0 and not yet tested by me, but I expect the same result as the other Alder Lake chipsets.
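A quick way to judge whether DMI is likely to be the limit is to add up what the disks behind the PCH could read and compare that with the link. This is a minimal sketch, assuming every disk behind the PCH (onboard ports plus any controller in a PCH-connected slot) reads at the same speed and shares DMI evenly. The link values are the theoretical ones listed for DMI 1.0/2.0/3.0; the separate ~2GB/s onboard SATA cap on newer chipsets is not modelled, and the disk speed in the usage lines is hypothetical.

```python
# Sketch: is the DMI link (CPU <-> PCH) the bottleneck for a parity check?
# Assumptions: all disks behind the PCH read at the same speed and share the
# link evenly; link values are theoretical, real usable bandwidth is lower.
DMI_MBPS = {"DMI 1.0": 1000, "DMI 2.0": 2000, "DMI 3.0": 3940}


def dmi_share(link: str, disks_behind_pch: int) -> float:
    """Best-case MB/s per disk if the DMI link is split evenly."""
    return DMI_MBPS[link] / disks_behind_pch


def dmi_is_bottleneck(link: str, disks_behind_pch: int, disk_mbps: float) -> bool:
    """True when the disks together could read faster than the link allows."""
    return disks_behind_pch * disk_mbps > DMI_MBPS[link]


if __name__ == "__main__":
    # Hypothetical: 6 onboard ports + 4 on a PCH-attached card, disks at 250 MB/s
    print(dmi_share("DMI 2.0", 10))               # 200.0
    print(dmi_is_bottleneck("DMI 2.0", 10, 250))  # True
```

With 10 disks on DMI 2.0 the even split is 200MB/s per disk, while the measured result was 145MB/s, so the output is a best case rather than a prediction.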
DMI 1.0 can be a bottleneck using only the onboard SATA ports. DMI 2.0 can limit users with all onboard ports in use plus an additional controller, onboard or in a PCIe slot, that shares the DMI bus. On most home-market boards only the graphics slot connects directly to the CPU and all other slots go through the DMI (higher-end boards, usually with SLI support, have at least 2 such slots). Server boards usually have 2 or 3 slots connected directly to the CPU, and you should always use these slots first.

You can see the diagram for my X9SCL-F test server board below; for the DMI 2.0 tests I used the 6 onboard ports plus one Adaptec 1430SA in PCIe slot 4.

- UMI (2000MB/s), used on most AMD APUs, equivalent to Intel DMI 2.0: 6 x 203MB/s, 7 x 173MB/s, 8 x 152MB/s
- Ryzen link, PCIe 3.0 x4 (3940MB/s): 6 x 467MB/s (onboard SATA only)

I think there are no big surprises: most results make sense and are in line with what I expected. The exception may be the SASLP, which should have the same bandwidth as the Adaptec 1430SA but is clearly slower and can limit a parity check with only 4 disks.

I expect some variation in results from other users due to different hardware and/or tunable settings, but I would be surprised by big differences. Reply here if you get significantly better speed with a specific controller.

How to check and improve your parity check speed

System Stats from Dynamix V6 Plugins is usually an easy way to find out whether a parity check is bus limited. After the check finishes, look at the storage graph: on an unlimited system it should start at a higher speed and gradually slow down as it reaches the disks' slower inner tracks; on a limited system the graph will be flat at the beginning, or totally flat in a worst-case scenario. See the screenshots below for examples (arrays with mixed disk sizes will show speed jumps at the end of each disk, but the principle is the same).
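The "flat start" check on the storage graph can also be done on numbers. This is a minimal sketch, assuming you already have parity-check speed samples in MB/s taken at regular intervals from start to finish (exporting them from System Stats is not covered here). The 20% window and 5% drop threshold are illustrative guesses, not values from Unraid or from the tests above.

```python
# Sketch of the "flat graph" heuristic: an unlimited check slows down early
# (outer to inner tracks), a bus-limited one stays flat at the start.
# Assumptions: evenly spaced samples in MB/s; window and threshold are guesses.
from statistics import mean


def looks_bus_limited(samples: list[float],
                      window: float = 0.2,
                      max_drop: float = 0.05) -> bool:
    """Return True if speed barely drops over the first part of the check."""
    if len(samples) < 10:
        raise ValueError("need at least 10 samples")
    n = max(2, int(len(samples) * window))  # samples in the starting window
    head = samples[:n]
    q = max(1, n // 4)                      # compare first and last quarter
    start, end = mean(head[:q]), mean(head[-q:])
    return (start - end) / start < max_drop
```

A steadily declining curve returns False; a curve that sits at one speed for its first part (or the whole check) returns True.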
If you are not bus limited but still find your speed low, there are a couple of things worth trying:

- Diskspeed: your parity check speed can't be faster than your slowest disk. A big advantage of Unraid is the ability to mix disks of different sizes, but this can leave you with an assortment of disk models and sizes. Use Diskspeed to find your slowest disks, and when it's time to upgrade, replace those first.
- Tunables Tester: on some systems this can increase the average speed by 10 to 20MB/s or more; on others it makes little or no difference.

That's all I can think of; all suggestions welcome.

1 point
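The two limits discussed for parity checks, the slowest disk and any shared bus, combine into a simple upper bound: a parity check reads all disks in parallel, so it runs no faster than the slowest disk or the tightest per-disk bus share. This is a minimal sketch under that assumption. The disk names, speeds and bus figures in the usage lines are hypothetical, and bus values should be usable (measured) bandwidth rather than theoretical.

```python
# Sketch: rough upper bound on parity check speed.
# Assumption: all disks are read in parallel, so the check is capped by the
# slowest disk and by each bus's bandwidth split evenly among its disks.


def parity_check_ceiling(disk_mbps: dict[str, float],
                         buses: dict[str, tuple[float, list[str]]]) -> float:
    """disk_mbps: disk name -> sequential read speed (MB/s).
    buses: bus name -> (usable MB/s, names of the disks on that bus)."""
    ceiling = min(disk_mbps.values())                  # slowest disk
    for bandwidth, members in buses.values():
        if members:
            ceiling = min(ceiling, bandwidth / len(members))  # even bus share
    return ceiling


if __name__ == "__main__":
    # Hypothetical array: one slow disk, two disks on a small add-on controller
    disks = {"parity": 250.0, "disk1": 250.0, "disk2": 180.0, "disk3": 250.0}
    buses = {
        "onboard": (2000.0, ["parity", "disk1"]),
        "addon": (400.0, ["disk2", "disk3"]),
    }
    print(parity_check_ceiling(disks, buses))  # 180.0: disk2 is the limit
    disks["disk2"] = 250.0                     # replace the slow disk
    print(parity_check_ceiling(disks, buses))  # 200.0: now the add-on card is
```

This mirrors the advice to replace the slowest disks first: once they are gone, the next limit is often a bus.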

-

Use extra Unraid CPU or GPU computing power to help take the fight to COVID-19 with BOINC or Folding@Home! https://unraid.net/blog/help-take-the-fight-to-covid-19-with-boinc-or-folding-home

Stay safe, everyone. -Spencer

1 point

-

Disclosure: the upcoming version of Unraid supports multiple cache pools and allows the user to create as many cache pools as needed. Each pool can consist of 1 to 30 devices, and with a Pro license you are truly unlimited in the number of devices you can use.

1 point

-

I was able to resolve the issue thanks to the help provided. To anyone else coming here like I did: before you try the suggestion of using the '../cache/..' directory, remove the 'garrysmod' directory from your appdata so that the container does a fresh install of the game files. No idea why it worked, but it does, and that's what's important.

1 point

-

If you click on the orange icon for the drive on the Dashboard, you will get a menu in which one option is to acknowledge the error. You then only get notified again if it changes.

1 point

-

We must have some serious combined horsepower with our unRAID servers contributing to the effort. Despite the many who have joined, the unRAID team has thousands fewer members than the teams close to it in the rankings. It's time to fire up another server with some spare parts. I feel guilty only throwing 15 cores at the effort; if I throw the spare parts together, I can add another 8 for at least 30 days on a trial license.

1 point

-

https://forums.unraid.net/topic/57181-docker-faq/page/2/?tab=comments#comment-566087

1 point

-

That looks about right to me considering the SSDs used. You need faster 3D TLC SSDs (860 EVO, MX500, WD Blue 3D, etc.), and also higher-capacity models, at least 250GB, to get better than that.

1 point

-

Thank you for the quick reply! I was running MariaDB set to Bridge and Nextcloud set to br0. I tried with both running in br0, but got the same issue... then changed both to Bridge, and everything seems to work now.

1 point

-

Or for the gamer, 'I'm not getting old, I'm just levelling up'

Sent from my CLT-L09 using Tapatalk

1 point

-

They utilize the cache pool or Unassigned Devices, which doesn't use super.dat.

1 point

-

@Squid - Obviously I don't know you personally, but happy birthday anyway, and thanks for all that you do for the community. It is very much appreciated. Would love to see a pic including moose antlers...

1 point

-

Well, the "almost" is the fact that I USED MY OWN DNS and config; I'm sorry if this annoyed you. Other than that, it was exactly what was in the video. Thanks for reading... I got it working. Guess I'll use a more "newb" solution in the future.

1 point

-

Happy birthday @Squid! The big 'five-oh', eh? It's just a number, just keep chanting that.

1 point

-

Happy birthday Squid!! 🎂🎂 No idea you were such an old guy, though I'm not that far behind you.

1 point

-

Yes, that is the case with many ASRock Rack server boards. It depends on the CPU socket and chipset, but many of them have no onboard audio. I don't think I have seen onboard audio on any socket 1150 or 1151 server motherboard. Xeon W and Threadripper server motherboards do have onboard audio. Some Supermicro server boards have onboard audio, including socket 1151 boards for the Xeon 2100/2200. The ASRock Rack "workstation" boards have onboard audio, but none of them have IPMI. Any audio device (onboard or otherwise) would show up in the IOMMU groups for the board:

- IOMMU group 0: [8086:3e31] 00:00.0 Host bridge: Intel Corporation Device 3e31 (rev 0d)
- IOMMU group 1: [8086:1901] 00:01.0 PCI bridge: Intel Corporation Xeon E3-1200 v5/E3-1500 v5/6th Gen Core Processor PCIe Controller (x16) (rev 0d); [8086:1905] 00:01.1 PCI bridge: Intel Corporation Xeon E3-1200 v5/E3-1500 v5/6th Gen Core Processor PCIe Controller (x8) (rev 0d); [1000:0072] 02:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] (rev 03)
- IOMMU group 2: [8086:3e9a] 00:02.0 Display controller: Intel Corporation Device 3e9a (rev 02)
- IOMMU group 3: [8086:1911] 00:08.0 System peripheral: Intel Corporation Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th Gen Core Processor Gaussian Mixture Model
- IOMMU group 4: [8086:a379] 00:12.0 Signal processing controller: Intel Corporation Cannon Lake PCH Thermal Controller (rev 10)
- IOMMU group 5: [8086:a36d] 00:14.0 USB controller: Intel Corporation Cannon Lake PCH USB 3.1 xHCI Host Controller (rev 10); [8086:a36f] 00:14.2 RAM memory: Intel Corporation Cannon Lake PCH Shared SRAM (rev 10)
- IOMMU group 6: [8086:a368] 00:15.0 Serial bus controller [0c80]: Intel Corporation Cannon Lake PCH Serial IO I2C Controller #0 (rev 10); [8086:a369] 00:15.1 Serial bus controller [0c80]: Intel Corporation Cannon Lake PCH Serial IO I2C Controller #1 (rev 10)
- IOMMU group 7: [8086:a360] 00:16.0 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller (rev 10); [8086:a361] 00:16.1 Communication controller: Intel Corporation Device a361 (rev 10); [8086:a364] 00:16.4 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller #2 (rev 10)
- IOMMU group 8: [8086:a352] 00:17.0 SATA controller: Intel Corporation Cannon Lake PCH SATA AHCI Controller (rev 10)
- IOMMU group 9: [8086:a340] 00:1b.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #17 (rev f0)
- IOMMU group 10: [8086:a338] 00:1c.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #1 (rev f0)
- IOMMU group 11: [8086:a330] 00:1d.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #9 (rev f0)
- IOMMU group 12: [8086:a331] 00:1d.1 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #10 (rev f0)
- IOMMU group 13: [8086:a332] 00:1d.2 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #11 (rev f0)
- IOMMU group 14: [8086:a328] 00:1e.0 Communication controller: Intel Corporation Cannon Lake PCH Serial IO UART Host Controller (rev 10)
- IOMMU group 15: [8086:a309] 00:1f.0 ISA bridge: Intel Corporation Cannon Point-LP LPC Controller (rev 10); [8086:a323] 00:1f.4 SMBus: Intel Corporation Cannon Lake PCH SMBus Controller (rev 10); [8086:a324] 00:1f.5 Serial bus controller [0c80]: Intel Corporation Cannon Lake PCH SPI Controller (rev 10)
- IOMMU group 16: [144d:a808] 04:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller SM981/PM981/PM983
- IOMMU group 17: [8086:1533] 05:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)
- IOMMU group 18: [1a03:1150] 06:00.0 PCI bridge: ASPEED Technology, Inc. AST1150 PCI-to-PCI Bridge (rev 04); [1a03:2000] 07:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 41)
- IOMMU group 19: [8086:1533] 08:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)

You may have to add a PCIe audio card for audio to a VM.

1 point
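On a Linux host the same check can be scripted instead of reading the IOMMU listing by eye. This is a minimal sketch, assuming the IOMMU is enabled so that /sys/kernel/iommu_groups/<group>/devices/ exists and each device exposes its PCI class code in a `class` file (e.g. 0x040300). Classes 0x0401 (multimedia audio controller) and 0x0403 (audio device, e.g. HD Audio) are treated as audio; the function name is illustrative and not part of Unraid.

```python
# Sketch: find audio-class PCI devices per IOMMU group via Linux sysfs.
# Assumptions: IOMMU enabled; each device entry under
# /sys/kernel/iommu_groups/<group>/devices/ has a "class" file like "0x040300".
from pathlib import Path

AUDIO_CLASS_PREFIXES = ("0x0401", "0x0403")  # multimedia audio / audio device


def audio_devices(root: str = "/sys/kernel/iommu_groups") -> list[tuple[str, str]]:
    """Return (IOMMU group, PCI address) pairs for every audio-class device."""
    found = []
    for dev in sorted(Path(root).glob("*/devices/*")):
        class_file = dev / "class"
        if not class_file.is_file():
            continue
        if class_file.read_text().strip().startswith(AUDIO_CLASS_PREFIXES):
            found.append((dev.parent.parent.name, dev.name))
    return found


if __name__ == "__main__":
    hits = audio_devices()
    print(hits or "No audio device found; a PCIe audio card may be needed.")
```

An empty result on a board like the one listed above matches the advice to add a PCIe audio card for VM audio.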

-

Happy Birthday. I never thought of squids being over 3 or 4 years old. Hope you have a wonderful day.

1 point

-

Happy birthday @Squid! Since I'm in the future, I can already wish you a happy birthday 🎂 I'm going to have a big party, as usual all alone, for my own birthday on Monday...

1 point

-

New Config will only (optionally) rebuild parity. If you think parity is already valid, you can check a box when you go to start the array telling it parity is valid, and it won't even rebuild parity. No other disk will be changed in any case. The reason for the warning is that if someone has a data disk that needs to be rebuilt, doing New Config makes it forget that the data disk needs to be rebuilt. The main danger from New Config is accidentally assigning a data disk to the parity slot, thus overwriting that data with parity.

1 point

-

This is fine, but it honestly has me reconsidering Unraid as a viable platform for me. It's a bit too late in the game now, as it would require substantial financial resources to migrate to a different platform. I know neither you nor CA is affiliated with Limetech or Unraid, but it's a pretty integral part of the user experience, and it's now tainted. It has taken me since last Wednesday to come to this decision, hence the long gap between the event and my posting about it. I realized it had happened, and I had to think long and hard about how I wanted to proceed.

1 point

-

I'm not sure which application in the Community Apps library was responsible for the popup alert about COVID-19 support, but I will be uninstalling all CA packages as a result. It was invasive and I'm not okay with that. I get that it was a good gesture, and it has some serious circumstances behind it, but I like it when other people aren't touching my systems. I'm even okay with pinning the apps to the top of the list like they are, just not the invasive nature of the popup and warning banner. It felt like I was visiting a webpage with my adblocker off.

1 point

-

I did this just yesterday. First check that everything is running normally and healthy. Don't do this if a disk is already missing or being emulated from parity; that would be a bad thing to do. I also took a screenshot of the Main page showing all my disks so I could see their current assignments and double-check them.

How I did it: I shut down Unraid (my array was healthy, I just didn't want a disk anymore), then removed the disk I no longer wanted. Unraid then boots up and does not start the array because of a missing disk. I then chose Tools > New Config and opted to preserve all the current disk assignments. I could then remove the disk from the new assignments. Once done, I saved that and started the array. All seemed to work fine.

1 point

-

Yes, in the template, hit Remove next to the variable. If you try to blank it out, it will default to abc/abc.

1 point

-

I'll take a look, but for a moment imagine somebody like me who read the blog post and wants to contribute. I come to the forum and see pages and pages of posts, with no single place pointing to instructions. After reading a couple of pages, you know what they do? They leave.

Update: I added the same runtime and device parameters as for my Plex docker. No web UI; even the app says the web UI is broken. The Folding@home forum post is 6 pages long, with no single point of instructions.

1 point

-

Once I heard about F@H having coronavirus work units, I went ahead and installed it on my work laptop (running full tilt all the time), my main Windows driver, and my HTPC. While it is immaterial which team you use (if you even want one), if you do choose one, unRaid's F@H team number is 227802. If you do happen to be one of those people who feel that this isn't a big deal, that choice is yours, but bear in mind that donating some spare cycles costs you nothing and can only help.

1 point

-

I'm now on Q35 v2.12 and have added "pcie_no_flr=1022:149c,1022:1487". Two shutdowns and starts without any issue. I will test again later.

1 point

-

Hi guys, I set up the reverse proxy with some help from the great videos by Spaceinvader One, but there are some extra security options I want to set up and I have no idea how. Hopefully someone here can help me out!

1. Create a redirect for all the reverse proxy dockers. What I have tried is changing the unifi-controller.subdomain.conf file of the docker, located in the appdata folder "appdata\letsencrypt\nginx\proxy-confs". If I type https://unifi.domain.com everything works fine, but I want to enter http://unifi.domain.com and be automatically redirected to https://unifi.domain.com.
2. Set up/enable the fail2ban service that is integrated in the Letsencrypt docker from Linuxserver.
3. Set up/enable the GeoIP service that is integrated in the Letsencrypt docker from Linuxserver.

Thx

1 point

-

Hello, is it possible to increase this 30-disk limit? I wanted to "join" my servers with an HBA card, so the 30-disk limit will be reached quickly. Thanks

1 point